GNU Screen + Irssi + PuTTY for Symbian

A match made in heaven.

Using IRC reliably has been something of a challenge for me over the past couple of months. I kept my cellphone connected 24/7 to the IRC channels I needed to idle in. Unfortunately, I don’t live in a 3G country, so any voice call interrupted the whole thing.

I also wanted to use my laptop for IRC whenever I was at home, but the unreliable internet connection didn’t make things any easier. There was always a lingering fear of missing important messages during one of the disconnections. I couldn’t see a solution that would fix all of these issues until someone recommended using Irssi along with GNU Screen.

This not only fixed every little issue I had ever had with IRC but also made full use of my love for all things command-line. In summary: I now have a “permanent” IRC session running on an SSH server in Lithuania. Whenever I feel like it, I can “attach” my laptop or my mobile and start using Irssi. If I receive a voice call, the IRC session keeps running and I can “reattach” later on. Simply put, the session keeps running even when I’m not attached to it from either device, and at any time of day I can connect to it and catch up on that day’s IRC activity.
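For anyone who wants to try this, here is a rough sketch of the Screen side of things, meant to be run on the server after SSH-ing in. The session name `irc` and the function names are just placeholders I picked for illustration, and it assumes `screen` and `irssi` are already installed there.

```shell
# Hypothetical session name; any label works.
SESSION=irc

# Start Irssi inside a new, detached screen session (run once on the server).
irc_start() {
    screen -d -m -S "$SESSION" irssi
}

# Reattach from any device; -d first detaches the session
# from wherever it is still attached.
irc_attach() {
    screen -d -r "$SESSION"
}

# Attach without kicking the other device off (multi-display mode).
irc_share() {
    screen -x "$SESSION"
}
```

Run `irc_start` once, then `irc_attach` from whichever device is at hand. With Screen's default key bindings, Ctrl-a followed by d detaches and leaves Irssi running.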

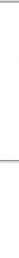

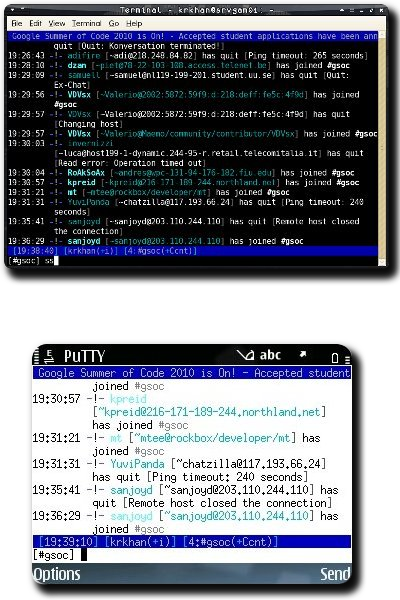

The screenshots above show me connected to the #gsoc channel on Freenode, first on my laptop and then on my E71, using the same screen session.

PuTTY for Symbian handles the SSH connection on the Nokia phone. If I ever rank the top 5 situations where CLI absolutely pwns GUI in terms of efficiency and usage, this nifty setup is definitely going to make the list.

Tags: Command-line, GNU, IRC, Irssi, Open Source, PuTTY, Screen, Series 60, Symbian, Technology